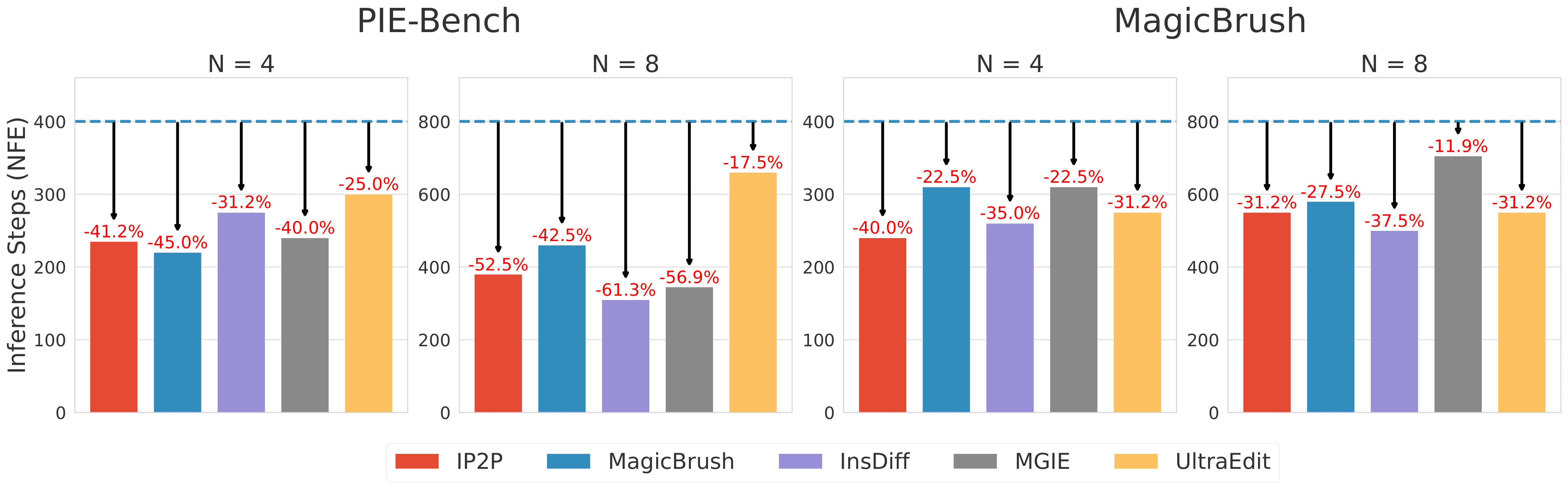

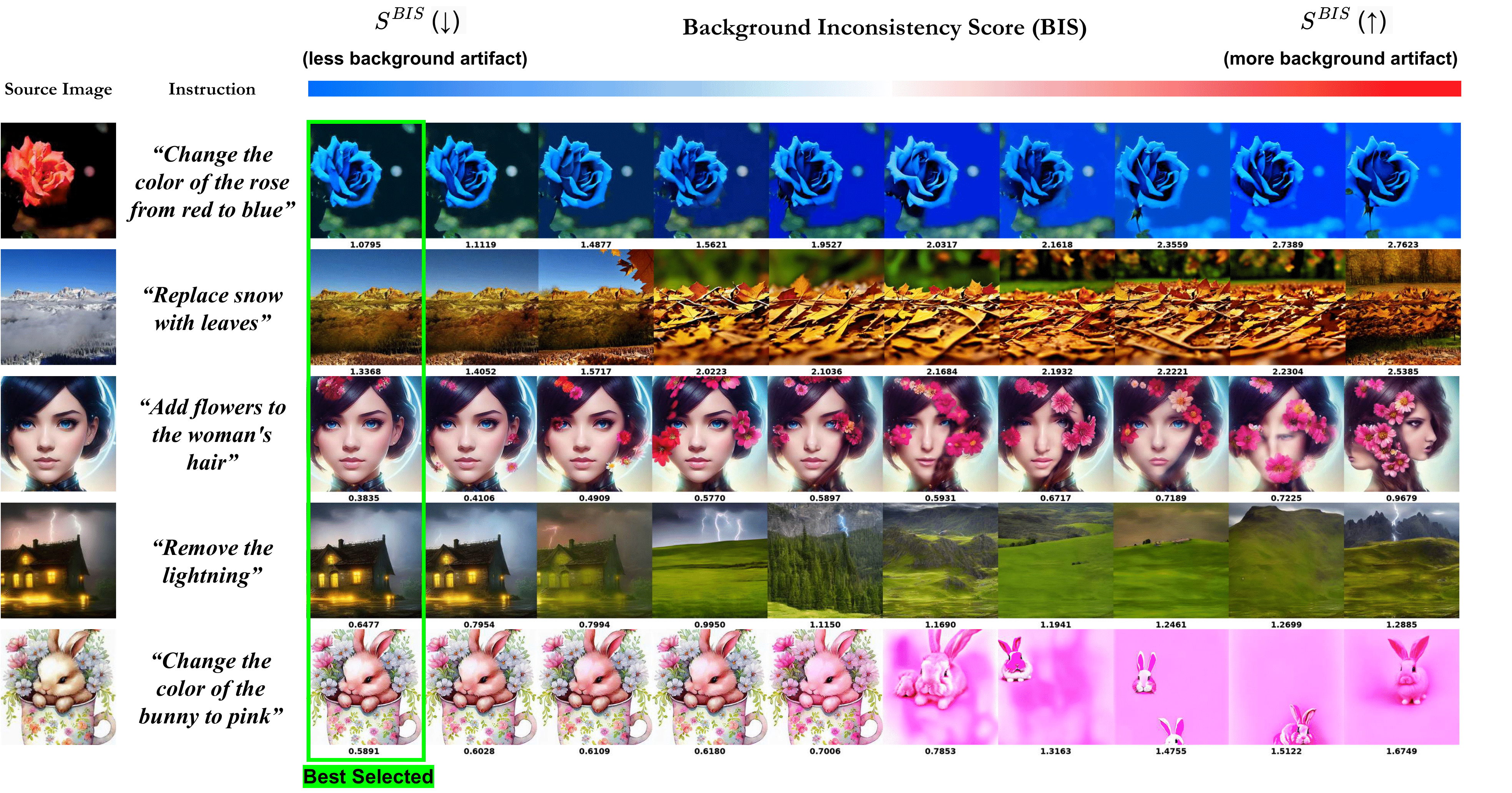

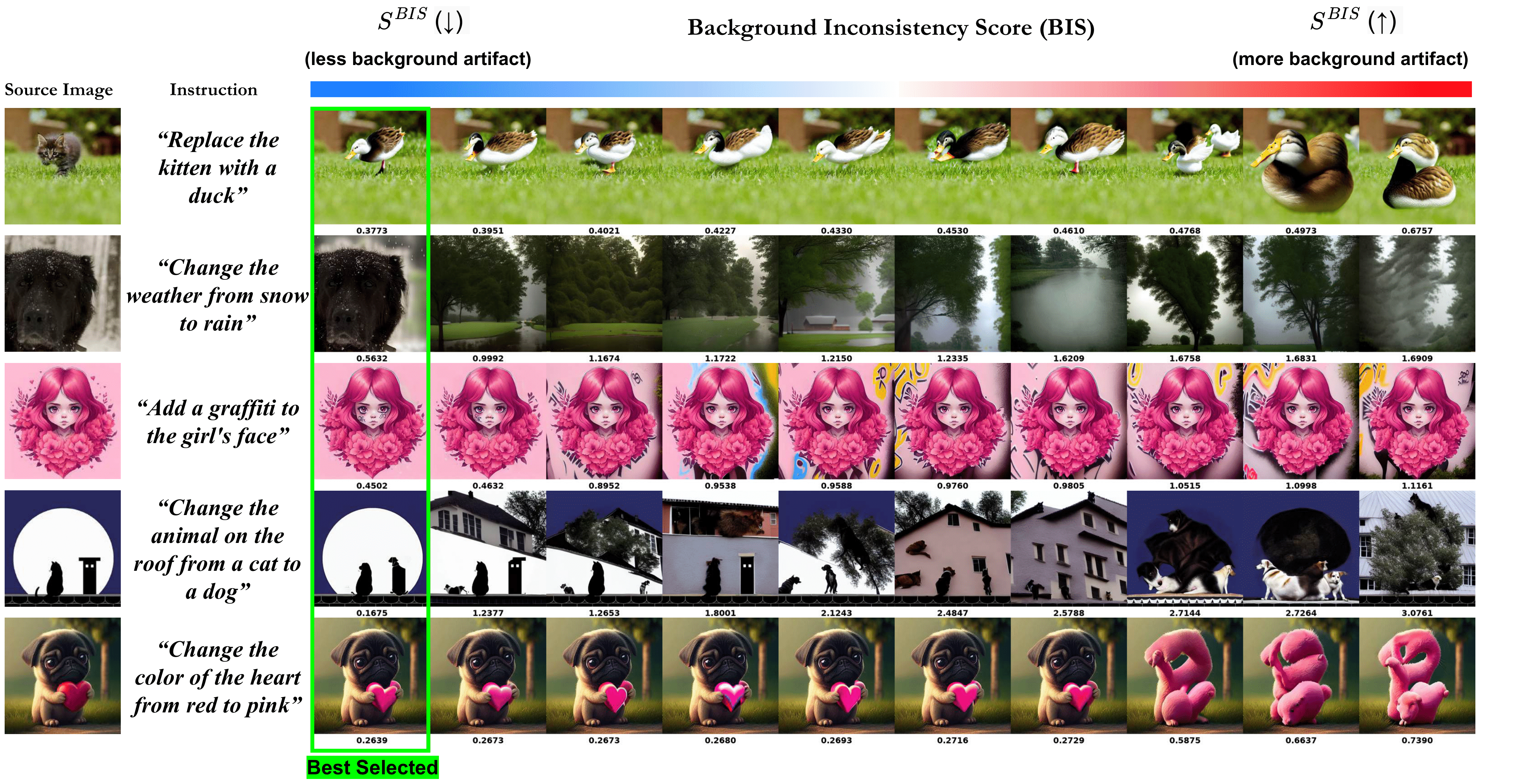

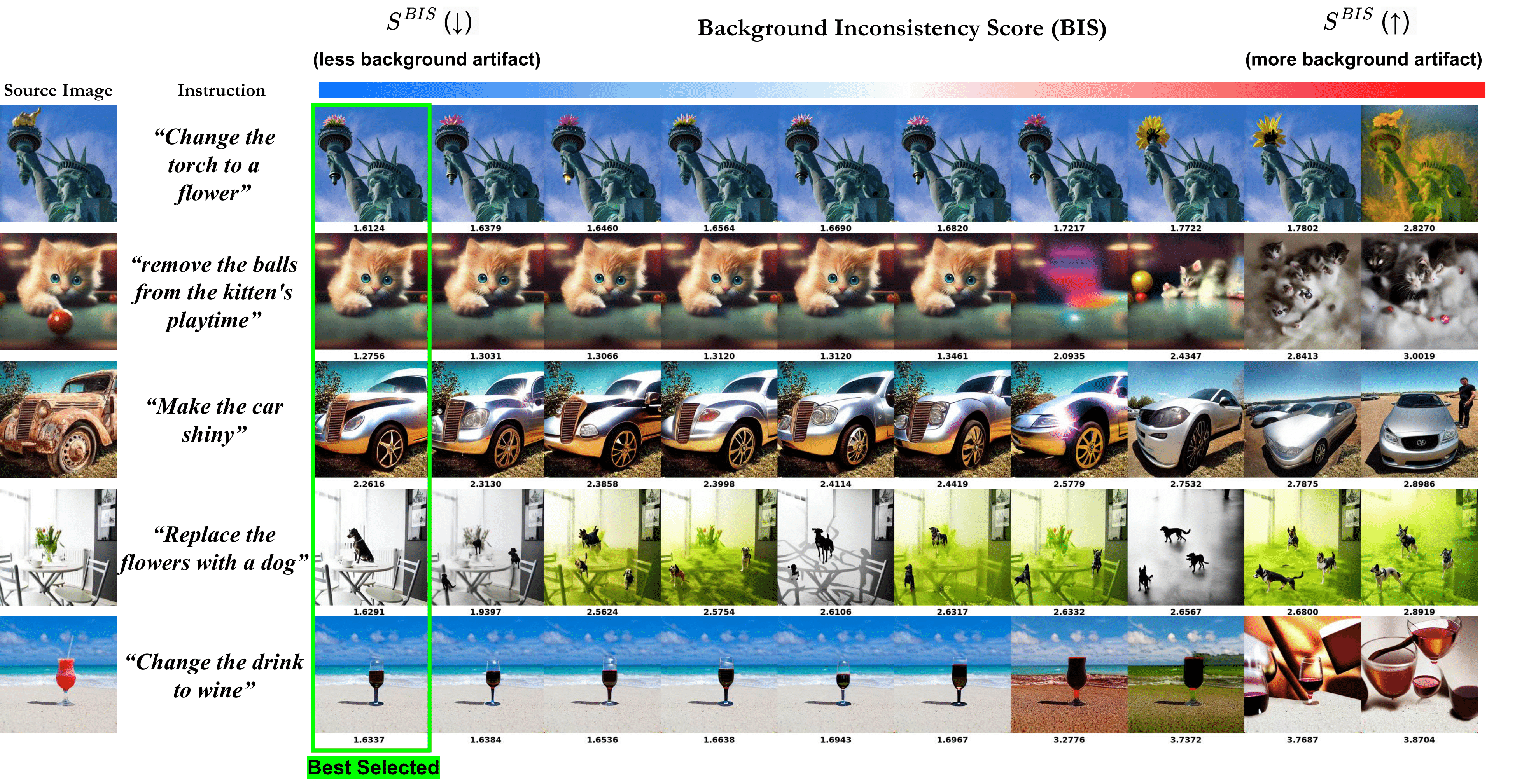

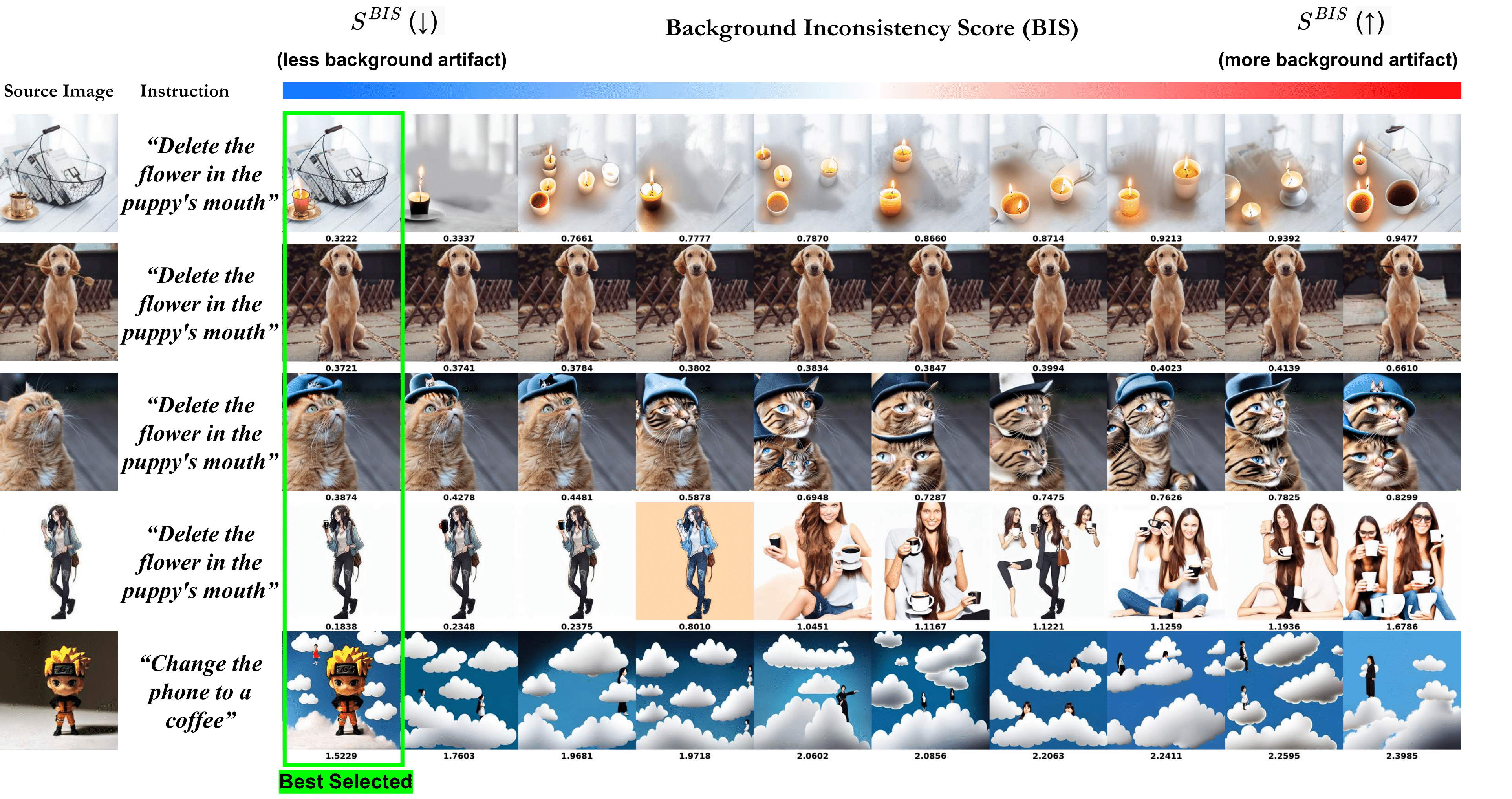

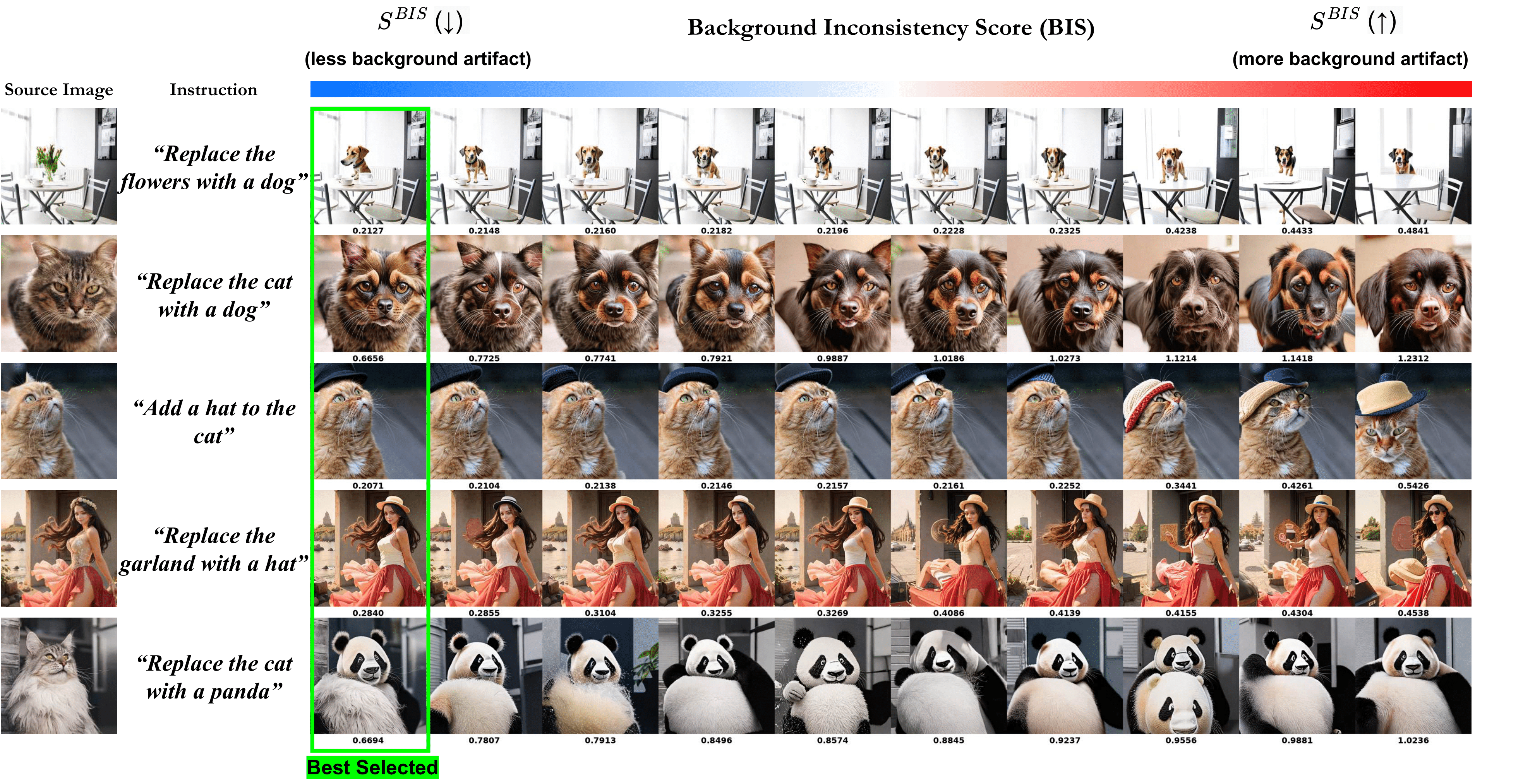

Despite recent advances in diffusion models, achieving reliable image generation and editing results remains challenging because stochastic noise in the sampling process induces inherent diversity across runs. In particular, instruction-guided image editing with diffusion models offers user-friendly editing capabilities, yet editing failures, such as background distortion, frequently occur across different attempts. Users often resort to trial and error, adjusting seeds or prompts until they obtain a satisfactory result, which is inefficient. While seed selection methods exist for Text-to-Image (T2I) generation, they depend on external verifiers, which limits their applicability, and evaluating multiple seeds increases computational cost, which reduces practicality. To address this, we first establish a new multiple-seed-based image editing baseline using background consistency scores, achieving Best-of-N performance without supervision. Building on this, we introduce ELECT (Early-timestep Latent Evaluation for Candidate selecTion), a zero-shot framework that selects reliable seeds by estimating background mismatches at early diffusion timesteps, identifying the seed that retains the background while modifying only the foreground. ELECT ranks seed candidates by a background inconsistency score, filtering out unsuitable samples early based on background consistency while fully preserving editability. Beyond standalone seed selection, ELECT integrates into instruction-guided editing pipelines and extends to Multimodal Large Language Models (MLLMs) for joint seed and prompt selection, further improving results when seed selection alone is insufficient. Experiments show that ELECT reduces computational costs (by 41% on average and up to 61%) while improving background consistency and instruction adherence, and achieves success rates of around 40% on previously failed cases, without any external supervision or training.

We introduce ELECT, a model-agnostic and efficient framework for selecting high-quality edited images from diffusion-based editing pipelines. ELECT stops denoising early and selects the best candidate based on background consistency. Selection is driven by the Background Inconsistency Score (BIS): a relative metric that penalizes unintended background changes while ignoring the intended foreground edit, so that the chosen candidate is the one with the cleanest, most consistent background.
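The exact BIS formula is not given on this page, so the following is only a minimal NumPy sketch of how a *relative* background score could be computed. It rests on assumptions of our own: each candidate provides a predicted clean image (or latent) at the stopping step, the source image is available in the same space, and the intended foreground edit is approximated as the region that most candidates change (a hypothetical consensus mask with a hypothetical `fg_quantile` threshold). The function name `background_inconsistency_scores` is hypothetical.

```python
import numpy as np


def background_inconsistency_scores(candidates: np.ndarray, source: np.ndarray,
                                    fg_quantile: float = 0.8) -> np.ndarray:
    """Hypothetical BIS-like score; lower means a cleaner background.

    candidates: (N, H, W, C) predicted clean images, one per seed (assumed input).
    source:     (H, W, C) source image in the same space (assumed input).
    """
    # Per-candidate, per-pixel change magnitude with respect to the source.
    diff = np.abs(candidates - source[None]).mean(axis=-1)  # (N, H, W)
    # Assumption: the intended edit is where candidates agree on change, so
    # pixels with a high median change across seeds are treated as foreground.
    consensus = np.median(diff, axis=0)  # (H, W)
    fg_mask = consensus >= np.quantile(consensus, fg_quantile)
    bg_mask = ~fg_mask
    # Average change over background pixels only, ignoring the foreground edit.
    bg_err = (diff * bg_mask[None]).sum(axis=(1, 2)) / max(bg_mask.sum(), 1)
    # "Relative": normalize by the mean error across candidates (assumption).
    return bg_err / (bg_err.mean() + 1e-8)


# Toy usage: three seeds make the same foreground edit; seed 1 also
# disturbs a small background patch, so it should rank worst.
source = np.zeros((8, 8, 3))
cands = np.zeros((3, 8, 8, 3))
cands[:, :4, :4, :] = 1.0        # shared foreground edit
cands[1, 6:, 6:, :] = 0.5        # unintended background change in seed 1
scores = background_inconsistency_scores(cands, source)
```

The consensus mask is one simple way to "ignore the intended foreground edit" without an external segmenter; any foreground estimate could be swapped in.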

ELECT halts all seeds at $t_{\text{stop}}$, ranks them by BIS, and completes denoising only for the top-ranked seed, preserving quality while sharply reducing compute.
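The halt, rank, and finish procedure can be sketched as a generic loop. This is a hedged illustration, not the authors' implementation: `step_fn` is a hypothetical stand-in for one denoising step of the editing model, `score_fn` for a BIS-like scorer (lower is better), and `stop_step` counts how many steps each seed runs before being halted.

```python
from typing import Callable, List, Sequence, Tuple

import numpy as np


def elect_select(
    init_latents: Sequence[np.ndarray],
    step_fn: Callable[[np.ndarray, int], np.ndarray],
    score_fn: Callable[[List[np.ndarray]], Sequence[float]],
    num_steps: int,
    stop_step: int,
) -> Tuple[np.ndarray, int, int]:
    """Hypothetical ELECT-style loop. Returns (final_latent, best_index, nfe)."""
    nfe = 0
    partial = []
    # Phase 1: advance every seed only up to the early-stop step.
    for z in init_latents:
        for i in range(stop_step):
            z = step_fn(z, i)
            nfe += 1
        partial.append(z)
    # Phase 2: rank candidates; lower score = more consistent background (assumed).
    scores = np.asarray(score_fn(partial))
    best = int(np.argmin(scores))
    # Phase 3: finish denoising only the top-ranked candidate.
    z = partial[best]
    for i in range(stop_step, num_steps):
        z = step_fn(z, i)
        nfe += 1
    return z, best, nfe


# Toy usage: a shrinking "denoiser" and a magnitude-based score.
latents = [np.full(4, 3.0), np.full(4, 1.0), np.full(4, 2.0)]
final, best, nfe = elect_select(
    latents,
    step_fn=lambda z, i: 0.9 * z,
    score_fn=lambda zs: [float(np.abs(z).mean()) for z in zs],
    num_steps=20,
    stop_step=5,
)
```

Here three seeds each run 5 steps, then only the winner runs the remaining 15, for 30 evaluations instead of the 60 needed to finish all three.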

ELECT extends to prompt selection by incorporating MLLMs, improving editing reliability when seed selection alone is insufficient.
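One way to picture joint seed and prompt selection is as a fallback search. The sketch below assumes, as our own illustration, that the MLLM's role is to propose rephrased instructions (wrapped by a hypothetical `propose_prompts` callable), that `score` returns a BIS-like value for a (prompt, seed) pair, and that "seed selection alone is insufficient" means the best score exceeds a hypothetical `threshold`. None of these names come from the paper.

```python
from typing import Callable, List, Sequence, Tuple


def select_seed_and_prompt(
    prompt: str,
    seeds: Sequence[int],
    score: Callable[[str, int], float],
    propose_prompts: Callable[[str], List[str]],
    threshold: float,
) -> Tuple[str, int, float]:
    """Hypothetical joint selection; returns (prompt, seed, score)."""
    # Seed selection alone: lowest score over seeds for the original prompt.
    best = min((score(prompt, s), prompt, s) for s in seeds)
    if best[0] <= threshold:
        return best[1], best[2], best[0]
    # Fallback: also search (prompt, seed) pairs over MLLM-proposed rewrites.
    for alt in propose_prompts(prompt):
        best = min([best] + [(score(alt, s), alt, s) for s in seeds])
    return best[1], best[2], best[0]


# Toy usage with a lookup table standing in for early-stop scores.
table = {
    ("make the sky red", 0): 0.9, ("make the sky red", 1): 0.8,
    ("turn the sky red", 0): 0.3, ("turn the sky red", 1): 0.5,
}
calls = []


def fake_mllm(p: str) -> List[str]:
    calls.append(p)  # record that the (hypothetical) MLLM was queried
    return ["turn the sky red"]


result = select_seed_and_prompt(
    "make the sky red", [0, 1], lambda p, s: table[(p, s)], fake_mllm, threshold=0.5
)
```

The MLLM is only queried when no seed clears the threshold, which keeps the common case as cheap as seed selection alone.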

When compared at nearly identical background MSE, ELECT still achieves slightly better quality. Its computational cost, measured as the number of function evaluations (NFE), grows only with the number of seeds and the early-stop step.
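To make the NFE claim concrete, here is a back-of-the-envelope count under simplifying assumptions of our own (not taken from the paper): one function evaluation per denoising step per seed, with guidance passes ignored; $T$ total sampling steps; $N$ seed candidates; and $k$ steps executed before stopping at $t_{\text{stop}}$ (the symbol $k$ is introduced here for illustration). Denoising every seed to completion serves as the reference point.

$$
\mathrm{NFE}_{\text{ELECT}} = Nk + (T - k) = T + (N - 1)k, \qquad \mathrm{NFE}_{\text{full}} = NT
$$

For example, with illustrative values $N = 4$, $T = 50$, $k = 5$, ELECT needs $50 + 3 \cdot 5 = 65$ evaluations versus $200$ for fully denoising all four seeds, a saving of $67.5\%$. This is a toy calculation, not the configuration behind the reported savings. The form $T + (N - 1)k$ shows why cost grows only with the seed count and the early-stop step: each extra seed adds just $k$ evaluations.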

@InProceedings{kim2025early,
  title={Early timestep zero-shot candidate selection for instruction-guided image editing},
  author={Kim, Joowon and Lee, Ziseok and Cho, Donghyeon and Jo, Sanghyun and Jung, Yeonsung and Kim, Kyungsu and Yang, Eunho},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  year={2025}
}